Udacity Self-Driving Car Project #4: Advanced Lane Lines Finding

Overview

The goal of this project is to write a software pipeline that identifies the lane boundaries in a video using more advanced algorithms than the simple approach in Udacity Self-Driving Car Project #1.

Final video:

Code is here.

Usage: python preprocess.py project_video.mp4

Steps are the following:

- Compute the camera calibration matrix and distortion coefficients given a set of chessboard images.

- Apply a distortion correction to raw images.

- Use color transforms, gradients, etc., to create a thresholded binary image.

- Apply a perspective transform to rectify the binary image (“birds-eye view”).

- Detect lane pixels and fit to find the lane boundary.

- Determine the curvature of the lane and vehicle position with respect to center.

- Warp the detected lane boundaries back onto the original image.

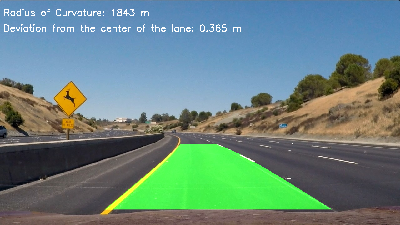

- Output visual display of the lane boundaries and numerical estimation of lane curvature and vehicle position.

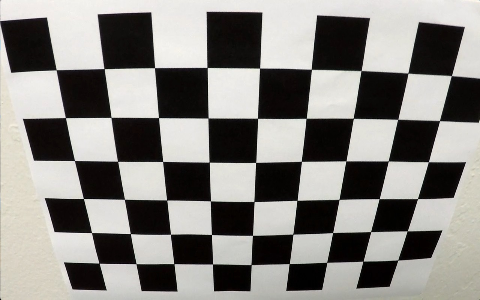

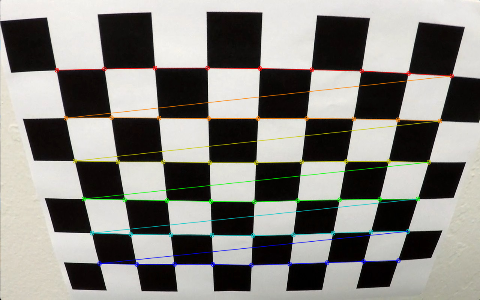

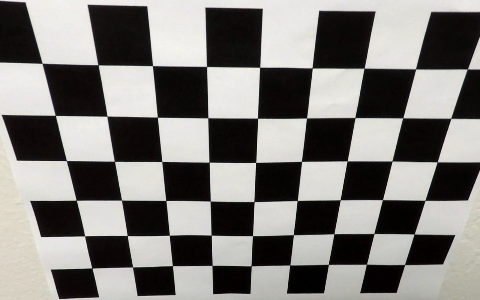

Camera calibration and distortion correction

Real cameras produce distorted images, mostly because of their lenses. With camera calibration we can determine the relation between the camera’s natural units (pixels) and real-world units (for example, millimeters) and undistort the images. (More details in the OpenCV documentation.)

First, we detect the chessboard pattern in the calibration images with cv2.findChessboardCorners. Then, using the obtained 3D points in real-world space and 2D points in the image plane, we calculate the camera matrix and distortion coefficients with the cv2.calibrateCamera function. Finally, to undistort an image, we pass the camera matrix and distortion coefficients to the cv2.undistort function.

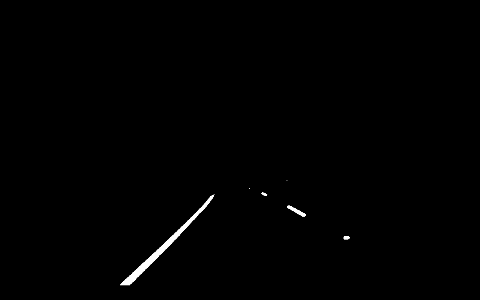

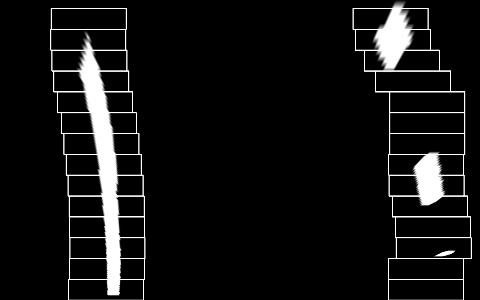

Creating a binary image

To create a binary representation of an image, we combine several methods: we extract yellow and white color masks, apply the Sobel operator to the image, and threshold the result. We then combine the results and perform a morphological closing operation to highlight the lane lines.

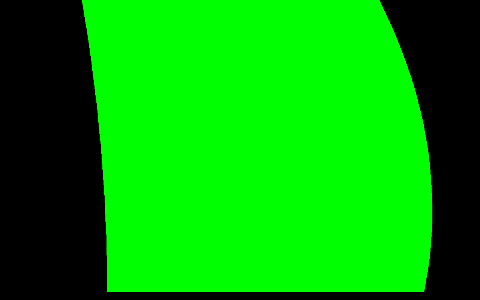

Applying perspective transform

Detect the lane boundaries
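A common way to detect the lane boundaries in the warped binary image is to locate the two lines with a column histogram and then fit a second-order polynomial to the pixels near each peak. The sketch below is a simplified stand-in under that assumption and may differ from the project's method; the function names and the margin value are hypothetical.

```python
import numpy as np


def find_lane_bases(binary_warped):
    """Return the x positions of the left and right lines near the car.

    Counts non-zero pixels per column in the lower half of the image
    and takes the strongest column on each side of the midpoint.
    """
    h, w = binary_warped.shape
    histogram = np.sum(binary_warped[h // 2:, :] > 0, axis=0)
    mid = w // 2
    left_base = int(np.argmax(histogram[:mid]))
    right_base = int(np.argmax(histogram[mid:])) + mid
    return left_base, right_base


def fit_lane(binary_warped, base_x, margin=100):
    """Fit x = A*y^2 + B*y + C to pixels within `margin` columns of base_x.

    Returns the coefficients [A, B, C], or None if too few pixels were found.
    """
    ys, xs = np.nonzero(binary_warped)
    keep = np.abs(xs - base_x) <= margin
    if keep.sum() < 3:
        return None
    return np.polyfit(ys[keep], xs[keep], 2)
```

The polynomial is fitted as x in terms of y because lane lines are close to vertical in the birds-eye view, so a single x can correspond to many y values.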

Determine the curvature of the lane and vehicle position with respect to center.

The curvature calculation method is described here. Assume that the camera is mounted at the center of the car. Then the car’s deviation from the center of the lane can be calculated as |image_center - lane_center|. To convert pixels to meters, I assumed that the visible lane section is about 30 meters long and 3.7 meters wide (values provided by Udacity).

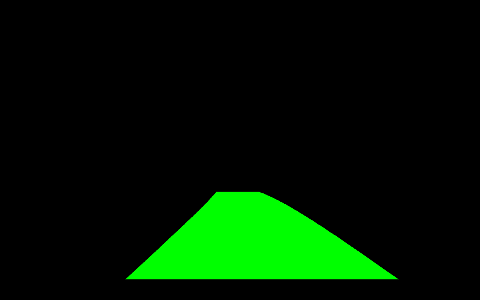
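The two measurements can be sketched as below, using the standard radius-of-curvature formula for a curve x = A*y^2 + B*y + C, namely R = (1 + (2*A*y + B)^2)^(3/2) / |2*A|. The 30 m and 3.7 m figures come from the text above; the assumption that they span 720 and 700 pixels in the warped image is mine, and the function names are hypothetical.

```python
import numpy as np

# Meters per pixel in the warped image. The pixel extents (720 and 700)
# are assumed values and depend on the chosen perspective transform.
YM_PER_PIX = 30 / 720
XM_PER_PIX = 3.7 / 700


def curvature_radius_m(ys_px, xs_px, y_eval_px):
    """Radius of curvature in meters at row y_eval_px.

    Refits the lane pixels in meter space, then applies
    R = (1 + (2*A*y + B)^2)^(3/2) / |2*A|.
    """
    ys_m = np.asarray(ys_px, dtype=float) * YM_PER_PIX
    xs_m = np.asarray(xs_px, dtype=float) * XM_PER_PIX
    A, B, _ = np.polyfit(ys_m, xs_m, 2)
    if A == 0:
        return float("inf")  # perfectly straight line
    y = y_eval_px * YM_PER_PIX
    return (1 + (2 * A * y + B) ** 2) ** 1.5 / abs(2 * A)


def center_offset_m(left_x_px, right_x_px, image_width_px):
    """|image_center - lane_center| converted to meters."""
    lane_center = (left_x_px + right_x_px) / 2
    return abs(image_width_px / 2 - lane_center) * XM_PER_PIX
```

The curvature is usually evaluated at the bottom row of the image, which is the point closest to the car.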

Warp the detected lane boundaries back onto the original image. Final result.

Summary

The most important part of the task is to create a binarized image that is as accurate as possible, because the next processing steps (and the final results) depend entirely on that image.